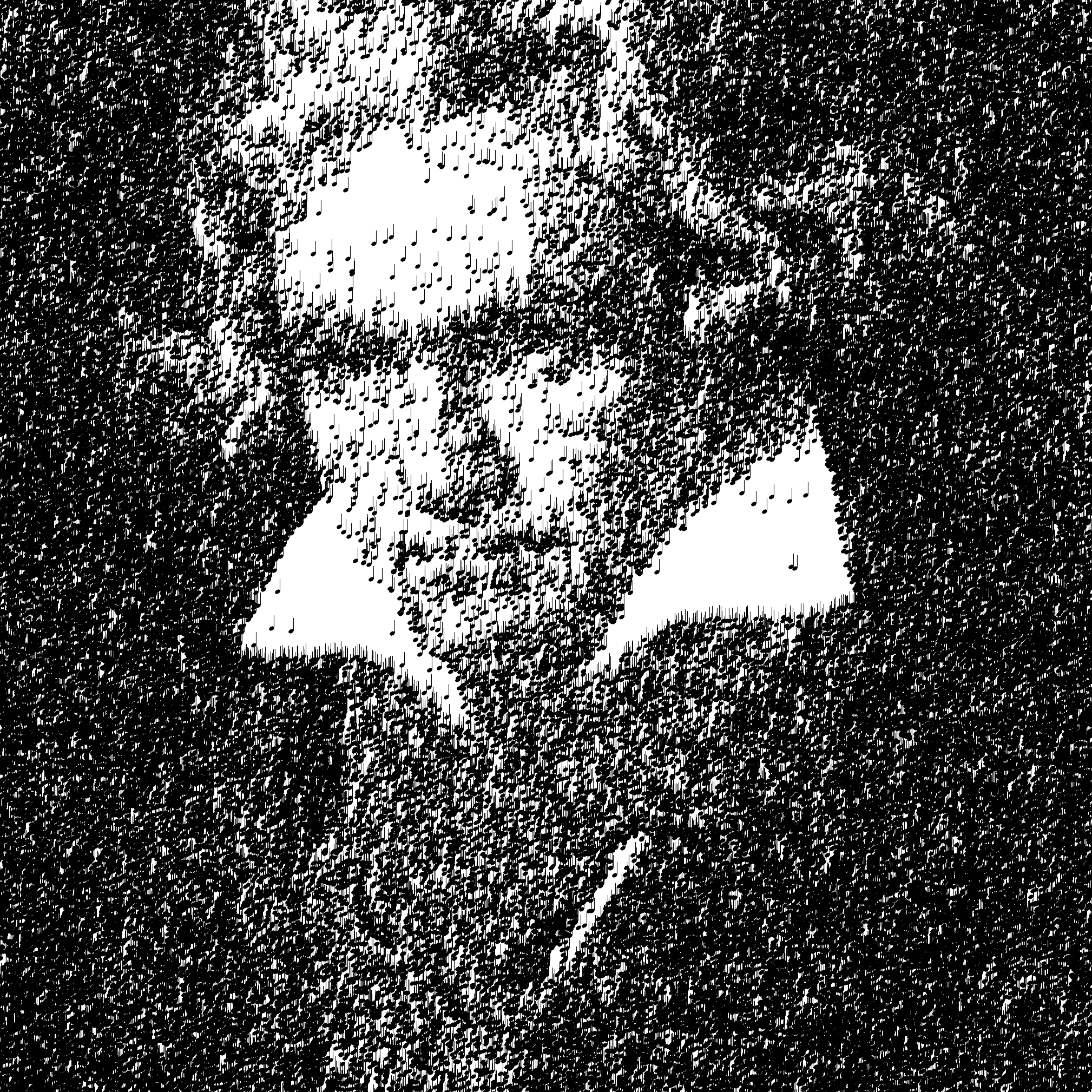

Violin Rendering

A little while ago, I had an idea to make pictures of musical subjects using only quarter notes, to give a kind of “parts and the whole” effect. I wrote a program to do this, so of course it’s repeatable for essentially any image. Check out some of the results:

The original implementation was pretty hacky and ran really slowly. The rough outline looked like this:

    import random
    from PIL import Image

    width, height = 2048, 2048
    # .load() gives pixel access, so image[x, y] returns an (R, G, B, A) tuple
    image = Image.open('violin.jpg').convert('RGBA').load()
    note = Image.open('note.png')
    background = Image.new('RGBA', (width, height), (255, 255, 255, 255))

    # slow!
    def paste(bg, fg, loc):
        # builds a full-size transparent layer just to hold one note
        fg_with_transparency = Image.new('RGBA', bg.size)
        # if fg is not already RGBA, paste() converts it on every call
        fg_with_transparency.paste(fg, loc)
        return Image.alpha_composite(bg, fg_with_transparency)

    for x in range(width):
        for y in range(height):
            if (random.random() < 0.04 and
                    image[x, y][0] / 255. < random.random()):
                background = paste(background, note, (x, y))

    background.save('composition.png')

For each pixel, I would find its grayscale value and, with some random chance, place a note there based on how dark that pixel was supposed to be. However, the `paste` call inside the inner loop was super slow. Not only was I compositing full-sized 2048×2048 images every time I added a note, I was also converting the note to RGBA repeatedly so it would have transparency to paste with. This code took several minutes to generate the violin image (and was even worse on Beethoven, since he’s got way more notes).

    116.04s user 31.55s system 98% cpu 2:30.30 total

Eventually I found it was possible to do much, much better with a fairly small change. Instead of using the custom `paste` function above, I switched to ensuring notes were RGBA at loading time, and then only alpha compositing the part of the image that was new, by passing in an `(x, y)` position.
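
As a sanity check on that change, here is a minimal sketch that runs both approaches on synthetic images and confirms they produce the same pixels. The 512×512 canvas, the 16×16 half-transparent square standing in for the note, and the grid of locations are all assumptions made for illustration, not the real inputs.

```python
import time
from PIL import Image

def paste_full(bg, fg, loc):
    """Slow path: build a full-size transparent layer, then composite it."""
    layer = Image.new('RGBA', bg.size)  # fully transparent canvas
    layer.paste(fg, loc)
    return Image.alpha_composite(bg, layer)

# Synthetic stand-ins (assumptions): a white canvas and a half-transparent
# black square playing the role of the note.
size = 512
note = Image.new('RGBA', (16, 16), (0, 0, 0, 128))
locations = [(x, y)
             for x in range(0, size - 16, 40)
             for y in range(0, size - 16, 40)]

slow = Image.new('RGBA', (size, size), (255, 255, 255, 255))
start = time.perf_counter()
for loc in locations:
    slow = paste_full(slow, note, loc)  # allocates a new canvas every time
slow_seconds = time.perf_counter() - start

fast = Image.new('RGBA', (size, size), (255, 255, 255, 255))
start = time.perf_counter()
for loc in locations:
    fast.alpha_composite(note, loc)  # only touches the note-sized region
fast_seconds = time.perf_counter() - start

same = slow.tobytes() == fast.tobytes()
print(f"identical output: {same}")
print(f"full-canvas: {slow_seconds:.4f}s, in-place: {fast_seconds:.4f}s")
```

The exact timings will vary by machine, so the sketch only prints them. The gap should grow with canvas size, since the slow path does work proportional to the whole canvas for every note.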

    note = Image.open(r"note.png").convert('RGBA')
    ...
    background.alpha_composite(note, (x, y))

This meant the full code was also shorter, looking like this:

    from PIL import Image
    import random

    background = Image.new('RGBA', (2048, 2048), (255, 255, 255, 255))
    image = Image.open(r"violin.jpg").convert('RGBA').load()
    # convert once at load time so every composite can reuse the RGBA note
    note = Image.open(r"note.png").convert('RGBA')

    for x in range(2048):
        for y in range(2048):
            if random.random() < 0.04 and image[x, y][0] / 255. < random.random():
                # in-place composite of just the note-sized region at (x, y)
                background.alpha_composite(note, (x, y))

    background.save('composition.png')

These changes brought running time from a few minutes to about 1.5 seconds. They also didn’t require any tough algorithmic thinking or fancy data structures. Mostly, it was just the result of carefully re-reading my own code.

    1.36s user 0.05s system 98% cpu 1.436 total

Speed is nice for its own sake, but it can also unlock creative possibilities. For example, I was now able to generate 60 frames of an animation in about two minutes, where with my original code this would have taken over an hour. However, generating frames is an example of an operation that can be done purely in parallel without thinking much about the implementation at all. So, I pulled in `multiprocessing`.

    from PIL import Image
    import random
    import multiprocessing

    image = Image.open(r"violin.jpg").convert('RGBA').load()
    note = Image.open(r"note.png").convert('RGBA')

    def gen_frame(frame):
        # fresh canvas per frame: each worker renders several frames, so a
        # shared module-level background would keep notes from earlier frames
        background = Image.new('RGBA', (2048, 2048), (255, 255, 255, 255))
        for x in range(2048):
            for y in range(2048):
                if random.random() < 0.04 and image[x, y][0] / 255. < random.random():
                    background.alpha_composite(note, (x, y))
        # the out/ directory must already exist
        background.save(f'out/{frame}.png')

    if __name__ == '__main__':
        # one worker per CPU core by default; frames are independent tasks
        with multiprocessing.Pool() as pool:
            pool.map(gen_frame, range(60))

With this parallelization added to the mix, I went from “over an hour” to “under 20 seconds” to render 60 frames of a 2048×2048 image, and it was almost completely painless: just two fairly trivial steps. (The gif below has only 24 frames, to make it smaller.)

    220.15s user 3.98s system 1266% cpu 17.701 total
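
To put the three timings side by side, here is a small back-of-the-envelope calculation using only the numbers quoted in the shell output. It rests on one assumption: that every frame costs about as much as the single measured run, which is an extrapolation rather than something that was measured.

```python
# Timings copied from the shell output quoted in this post (seconds).
original_frame_wall = 2 * 60 + 30.30   # 2:30.30, original paste() version
fast_frame_wall = 1.436                # in-place alpha_composite version
parallel_wall = 17.701                 # 60 frames with multiprocessing.Pool
parallel_cpu = 220.15 + 3.98           # user + system for the parallel run
frames = 60

# Assumption: each frame costs about the same as the single measured run.
serial_original = frames * original_frame_wall
serial_fast = frames * fast_frame_wall

print(f"original, serial:   {serial_original / 3600:.1f} hours")
print(f"fast, serial:       {serial_fast:.0f} s")
print(f"fast, parallel:     {parallel_wall:.1f} s")
print(f"parallel speedup:   {serial_fast / parallel_wall:.1f}x")
print(f"overall speedup:    {serial_original / parallel_wall:.0f}x")
print(f"average busy cores: {parallel_cpu / parallel_wall:.2f}")
```

The last figure comes out to about 12.66, which is the same number the shell reports as `1266% cpu`. The wall-clock speedup from parallelizing is smaller than that, a bit under 5x by this estimate, because the parallel run also spent more total CPU time than 60 serial runs would have.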

The important part isn’t really the one-off rendering speed—I’m okay with letting something sit overnight to get a cool result. However, iteration time is also important. The faster you can see results, the faster you can spot things you either like or want to change about what you’ve done.

Here, I really like how the animation “averages out” some of the randomness. That means the viewer can see more detail. Parts of the bridge and strings look almost three-dimensional, even though we’re still only using black notes on a white background (over time). You can even start to make out the F-holes, and some of the more-obvious shading from the original image. I probably wouldn’t have cared enough to discover this if it took hours to render.
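
The “averaging out” effect can be illustrated with a toy simulation. This is a sketch under simplifying assumptions: a hypothetical 64-pixel strip instead of the real image, and each note treated as darkening only its own pixel. Under the placement rule used in the rendering loop, a pixel with gray value `g` gets a note with probability `0.04 * (1 - g / 255)`, and averaging more frames should bring the observed hit rate closer to that target.

```python
import random

PLACE_CHANCE = 0.04  # same per-pixel gate as the rendering loop

def render_hits(gray_row, rng):
    """One simulated frame: 1 where a note would be placed, else 0."""
    return [1 if rng.random() < PLACE_CHANCE and g / 255 < rng.random() else 0
            for g in gray_row]

def average_frames(gray_row, n_frames, seed=0):
    """Per-pixel fraction of frames in which a note landed."""
    rng = random.Random(seed)
    totals = [0] * len(gray_row)
    for _ in range(n_frames):
        for i, hit in enumerate(render_hits(gray_row, rng)):
            totals[i] += hit
    return [t / n_frames for t in totals]

def mean_abs_error(gray_row, n_frames):
    """Average distance between the observed hit rate and the target rate."""
    target = [PLACE_CHANCE * (1 - g / 255) for g in gray_row]
    averaged = average_frames(gray_row, n_frames)
    return sum(abs(a - t) for a, t in zip(averaged, target)) / len(gray_row)

# Hypothetical strip fading from black (0) to white (255).
gray_row = [round(255 * i / 63) for i in range(64)]
for n in (1, 4, 60, 400):
    print(f"{n:>3} frames: mean error {mean_abs_error(gray_row, n):.4f}")
```

A single frame can only say “note” or “no note” at each pixel, while an average over many frames can take on in-between values, which is one way to think about why shading shows up in the animation.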

P.S.

I got curious about one last thing. How many notes are there in the average violin generated by this program? This is another question that’s only fun to answer if things run reasonably quickly. I generated 100 frames in parallel, had them count up their notes (instead of actually compositing them), and took the average: about 15,850 notes per frame. In a 60fps animation, you’re seeing just under a million notes per second.

    186.40s user 3.59s system 1076% cpu 17.654 total

This means my dinky little Python program tallied up ~1.6 million notes in under 20 seconds. Not bad!
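
For anyone who wants to try the counting trick without the image files, here is a minimal sketch. It assumes a hypothetical 128×128 gradient in place of the violin photo and counts serially rather than in a pool; `make_gray`, `expected_notes`, and `count_notes` are illustrative names, not taken from the original program. It also compares the simulated average against the expected value implied by the placement rule, `0.04 * (1 - g / 255)` per pixel.

```python
import random

PLACE_CHANCE = 0.04  # same per-pixel gate as the rendering loop

def make_gray(width, height):
    """Hypothetical stand-in for the photo: a horizontal gradient from
    black (0) on the left to white (255) on the right."""
    return [[round(255 * x / (width - 1)) for x in range(width)]
            for _ in range(height)]

def expected_notes(gray):
    """Expected notes per frame: each pixel fires with 0.04 * (1 - g/255)."""
    return sum(PLACE_CHANCE * (1 - g / 255) for row in gray for g in row)

def count_notes(gray, seed):
    """Run the placement test for every pixel and count hits, no compositing."""
    rng = random.Random(seed)  # separate, reproducible stream per frame
    return sum(
        1
        for row in gray
        for g in row
        if rng.random() < PLACE_CHANCE and g / 255 < rng.random()
    )

gray = make_gray(128, 128)
counts = [count_notes(gray, seed) for seed in range(100)]
print(f"expected per frame: {expected_notes(gray):.2f}")
print(f"simulated average:  {sum(counts) / len(counts):.2f}")
```

If the same model holds for the real image, the numbers above allow a rough inference in the other direction: 2048 × 2048 × 0.04 is about 167,772 candidate positions per frame, so an average of 15,850 notes would correspond to a mean darkness of roughly 9%.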